As large language models (LLMs) continue to impress the public with fluent conversation and vast knowledge recall, many assume that Artificial General Intelligence (AGI) will naturally emerge from scaling these systems further. However, a growing number of researchers argue that this assumption is fundamentally flawed.

AGI, if it arrives, is unlikely to be born from text alone.

Why LLMs Are Not AGI

LLMs are trained to predict the next token based on massive amounts of text data. This gives them an extraordinary ability to mimic understanding, reasoning, and even creativity. But beneath the surface, they lack several core components of true intelligence.

They do not:

- Experience the physical world
- Understand cause and effect through action
- Suffer real consequences for being wrong

An LLM can describe a car accident perfectly, but it never crashes. It can explain fear, but never feels it. Intelligence without consequence is fundamentally incomplete.
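The next-token objective described at the start of this section can be made concrete with a deliberately tiny sketch: a bigram counter in Python. It is not how LLMs are built. Real models are neural networks over subword tokens, trained on vastly more data. It does, however, share the same basic objective of predicting what comes next from text statistics alone. The function names and toy corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram(text: str) -> Dict[str, Counter]:
    """Count, for each token, which tokens follow it in the training text."""
    tokens = text.split()  # naive whitespace tokenization (illustrative only)
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], token: str) -> Optional[str]:
    """Return the most frequent follower of `token`, or None if it was never seen."""
    counts = model.get(token)  # .get does not create entries in a defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus: the model "knows" about crashes only as word patterns.
corpus = "the car hit the wall . the car stopped . the driver was afraid ."
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

The model can continue a sentence about a car hitting a wall without ever having been in one. Nothing in its training signal depends on what happens physically, only on which words tend to follow which.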

Intelligence Requires a World to Push Back

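Yann LeCun has repeatedly argued that language alone is not enough, and that intelligent systems must learn models of the world through observation of and interaction with it. The argument here goes a step further: prediction, planning, and learning only become meaningful when actions have real, often irreversible, outcomes.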

True intelligence requires:

- A model of the physical world
- The ability to act within it
- Feedback loops based on success and failure

None of these can be acquired by a language-only system, no matter how large it grows.
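The feedback loop in the list above can be illustrated with a classic toy problem, the multi-armed bandit. This is a swapped-in textbook example, not a description of how LeCun or anyone else proposes to build AGI: an agent repeatedly picks an action, the environment returns success or failure, and the agent updates its beliefs. The success rates, step count, and epsilon-greedy strategy are all illustrative assumptions.

```python
import random


def run_bandit(true_success_rates, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: act, observe success or failure, update estimates."""
    rng = random.Random(seed)
    n_actions = len(true_success_rates)
    estimates = [0.0] * n_actions  # agent's belief about each action's success rate
    counts = [0] * n_actions       # how many times each action has been tried
    for _ in range(steps):
        # Explore with probability epsilon; otherwise exploit the best current estimate.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])
        # The environment "pushes back": success (1) or failure (0),
        # drawn from rates the agent never sees directly.
        reward = 1.0 if rng.random() < true_success_rates[action] else 0.0
        # Incremental running-mean update from the observed outcome.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


# Hypothetical environment with three actions of differing quality.
estimates, counts = run_bandit([0.2, 0.5, 0.8])
print(counts, [round(e, 2) for e in estimates])
```

The key difference from passive prediction is that the agent's data depends on its own choices: it only learns how good an action is by taking it and living with the outcome.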

Tesla’s Path: Intelligence Through Embodiment

Tesla represents a radically different approach to AI. Instead of training models to talk, Tesla trains systems to see, move, and decide in real time.

Self-driving cars and humanoid robots must:

- Predict the motion of other agents
- Understand spatial relationships
- Optimize decisions under uncertainty

Mistakes are costly, and the environment enforces reality: a wrong prediction about a pedestrian or a misjudged grasp has physical consequences. This pressure pushes the system to build an internal world model, a key ingredient for general intelligence.

AGI Will Likely Be Silent at First

Popular culture imagines AGI as something that speaks fluently to humans. But intelligence does not require conversation.

The first AGI may not talk much at all.

It may spend most of its time:

- Simulating future states of the world
- Optimizing long-term objectives
- Learning from physical interaction

Only later might it acquire language as a secondary interface — not as its core capability.

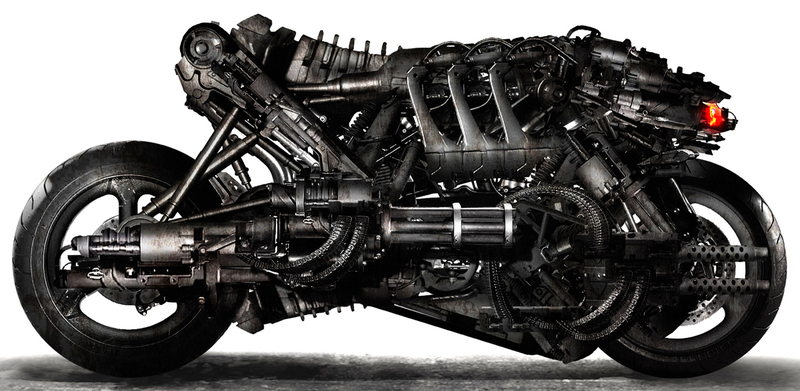

A Skynet-Like Origin (Without the Fiction)

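In the Terminator films, Skynet emerges from military systems designed to control real-world machines. The story is fiction, but its underlying idea, that general intelligence could arise in systems built to act on the physical world rather than to converse, is technically plausible.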

AGI is more likely to emerge from systems that:

- Control vehicles, robots, or infrastructure
- Continuously predict and act in the physical world
- Improve through real-world feedback loops

In this sense, AGI will not be “trained” — it will grow.

Conclusion

Large language models are powerful tools, but they are not the path to AGI. Intelligence is not language. Intelligence is the ability to model reality, act within it, and adapt when reality pushes back.

If AGI arrives, it will likely come from the fusion of:

- Physical embodiment (as seen in Tesla’s approach)
- World modeling (as advocated by Yann LeCun)
- Long-term autonomous learning

Chatbots may talk about intelligence — but AGI will live it.